This post introduces a testing technique called golden tests, snapshot tests, or characterisation tests. The technique can be a handy tool in your testing toolbox for maintaining invariants across versions of a piece of software.

Introductory examples

Golden tests are applicable in a surprisingly wide range of scenarios. Here we will look at some examples before digging into what they have in common.

Consistent hashing

The smos-scheduler tool uses the hash of a schedule to identify .smos files that it has scheduled previously.

    header: Weekly Review
    properties:
      schedule-hash: '3065399901563069906'

Recently I upgraded smos to a newer version of its Haskell dependencies. The hashable dependency was upgraded and its hashing function changed. As a result, all the schedules were rerun the next time that I ran smos-scheduler.

To try to prevent such an issue from reoccurring in the future, I added a golden test. This test hashes a given schedule and asserts that its hash is exactly the same as a given string. The string is "just" the current output of that hash function.

    spec = do
      it "produces the exact same hash, consistently" $
        renderScheduleItemHash [...]
          `shouldBe` "sARhcXIVaaVp94P3nKt4HkR8nkM6HgxrwpY5kb3Lvf4="

If the hash function changes again in the future, this test will (probably) fail.

Optimisations

Database optimisations, and optimisations in general, can be finicky. Some compilers will have a way of spitting out information about the optimisations they are using.

Postgres is an example of a database that can output this information to a file. These files, in turn, can then be committed and checked against.

    Parsed test spec with 4 sessions

    starting permutation: d1a1 d2a2 e1l e2l d1a2 d2a1 d1c e1c d2c e2c
    step d1a1: LOCK TABLE a1 IN ACCESS SHARE MODE;
    step d2a2: LOCK TABLE a2 IN ACCESS SHARE MODE;
    step e1l: LOCK TABLE a1 IN ACCESS EXCLUSIVE MODE; <waiting ...>
    step e2l: LOCK TABLE a2 IN ACCESS EXCLUSIVE MODE; <waiting ...>
    step d1a2: LOCK TABLE a2 IN ACCESS SHARE MODE; <waiting ...>
    step d2a1: LOCK TABLE a1 IN ACCESS SHARE MODE; <waiting ...>
    step d1a2: <... completed>
    step d1c: COMMIT;
    step e1l: <... completed>
    step e1c: COMMIT;
    step d2a1: <... completed>
    step d2c: COMMIT;
    step e2l: <... completed>
    step e2c: COMMIT;

The Plutus compiler does something similar: it checks intermediate output against previous versions to notice when the optimiser gets worse.

Compiler error messages

Users' main interaction with compilers happens through error messages. As such, compiler authors spend a lot of time working on making error messages great. One technique that can help with this is to output one of each type of error message to a separate file and commit those files. When those messages change across versions, even by accident, we can see those changes in the commit diff.

GHC does something like this, and you can see this in the should_fail subdirectories of its test suite. For example, see the output for this obscure error message:

    T10826.hs:7:1: error:
        • Annotations are not compatible with Safe Haskell.
          See https://gitlab.haskell.org/ghc/ghc/issues/10826
        • In the annotation:
            {-# ANN hook (unsafePerformIO (putStrLn "Woops.")) #-}

When error messages are improved in a given commit, this can even be seen in the commit diff!
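As a rough sketch of this technique, assuming a toy compiler rather than GHC: the `CompileError` type, its messages, the `examples` list and the `.stderr` file naming below are all hypothetical. The idea is to keep one example per kind of error, render it, and pair it with the golden file that it should be compared against.

```haskell
import System.FilePath ((<.>), (</>))

-- | Hypothetical error type for a toy compiler (an assumption, not GHC's).
data CompileError
  = UnboundVariable String
  | TypeMismatch String String
  deriving (Show, Eq)

-- | Render an error exactly as the user would see it.
renderError :: CompileError -> String
renderError (UnboundVariable name) =
  "error: variable not in scope: " ++ name
renderError (TypeMismatch expected actual) =
  unlines
    [ "error: type mismatch",
      "  expected: " ++ expected,
      "  actual:   " ++ actual
    ]

-- | One example per kind of error, named after the golden file it belongs to.
examples :: [(String, CompileError)]
examples =
  [ ("unbound-variable", UnboundVariable "foo"),
    ("type-mismatch", TypeMismatch "Int" "Bool")
  ]

-- | Pair each golden file path with the message that is currently rendered.
-- A golden test then compares each file's contents against its message.
goldenFiles :: FilePath -> [(FilePath, String)]
goldenFiles dir =
  [ (dir </> name <.> "stderr", renderError err)
    | (name, err) <- examples
  ]
```

Because every kind of error has its own file, a change to a single message shows up as a small, reviewable diff in exactly one file.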

Encoded representation

The Smos editor writes .smos files that need to be readable years from when they are written. These files are text files, so this is always possible, but it would be even nicer if Smos itself could still open them as well.

Smos keeps track of the versions of its data format that it can read and write. It also has some example data that is output for the current data format. This way, the test can fail if Smos unexpectedly outputs the same data differently:

    - header: hello world
      contents: |-
        some
        big
        contents
      timestamps:
        DEADLINE: 2021-03-13
        SCHEDULED: 2021-03-12
      properties:
        client: cssyd
        timewindow: 30m
      tags:
      - home
      - online

Furthermore, Smos outputs a generalised data schema for its data format in the same way as well. For example, here is the golden output for the logbook data schema:

    def: Logbook
    # Logbook entries, in reverse chronological order.
    # Only the first element of this list has an optional 'end'.
    - # LogbookEntry
      start: # required
        # start of the logbook entry
        def: UTCTime
        # %F %T%Q
        <string>
      end: # optional
        # end of the logbook entry
        ref: UTCTime

This schema is checked against the current schema, so that the build can fail if the schema changes unexpectedly.

API Specification

We can take this idea of a golden data schema even further. When generating an OpenAPI3 Specification of an API from code, we can commit this specification to the repository as well.

This way, the API cannot be changed by accident without that showing up in commit diffs. It also allows code reviewers to see the impact of code changes on the API.

Furthermore, we can use this committed data to statically host an API explorer such as SwaggerUI.

Pretty web page

I find it very difficult to make any website look good, so I tend to make a page look decent by using a CSS framework and/or by asking help from others. After that, I fear accidentally changing how a page looks and not noticing.

To help prevent this issue, Social Dance Today has screenshot golden tests.

The test runs as follows:

1. The web server is started with a blank database.
2. The test runner populates the database with example data.
3. A selenium web-driver navigates to the page to find a rendering of this data.
4. The screen size is set to given dimensions.
5. The web-driver takes a screenshot.
6. If the screenshot differs from the golden screenshot, the test fails.
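The last two steps can be sketched as follows. This is only an illustration under stated assumptions: the `ScreenSize` type, the file naming scheme and both function names are hypothetical, and driving the browser is left out entirely. It shows one golden file per page and screen size, and a byte-for-byte comparison of the fresh screenshot with the golden one.

```haskell
import qualified Data.ByteString as BS
import System.FilePath ((<.>), (</>))

-- | Browser window size for one screenshot (hypothetical type).
data ScreenSize = ScreenSize
  { screenWidth :: Int,
    screenHeight :: Int
  }
  deriving (Show, Eq)

-- | Each page and screen size gets its own golden file, for example
-- "home-1920x1080.png". The naming scheme is an assumption.
goldenScreenshotPath :: FilePath -> String -> ScreenSize -> FilePath
goldenScreenshotPath dir page (ScreenSize w h) =
  dir </> (page ++ "-" ++ show w ++ "x" ++ show h) <.> "png"

-- | Final step: the test passes only if the fresh screenshot is
-- byte-for-byte identical to the golden one.
screenshotMatches :: BS.ByteString -> BS.ByteString -> Bool
screenshotMatches golden current = golden == current
```

An exact byte comparison is the simplest choice. It only works if rendering is deterministic, which is why the steps above fix the database contents and the screen size first.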

These are two screenshots from my test suite.

They use different screen dimensions, to make it easy to check that a page still looks good on various devices.

Golden tests in general

Recall that the purpose of a test is to fail, and thereby show that a certain defect exists.

The defects that golden tests are trying to find are those that cause a certain result to differ from the result that a previous version produced.

In other words, the tester is trying to maintain an invariant across commits. Furthermore, they use these tests to ensure that someone has to sign off on the invariant being broken.

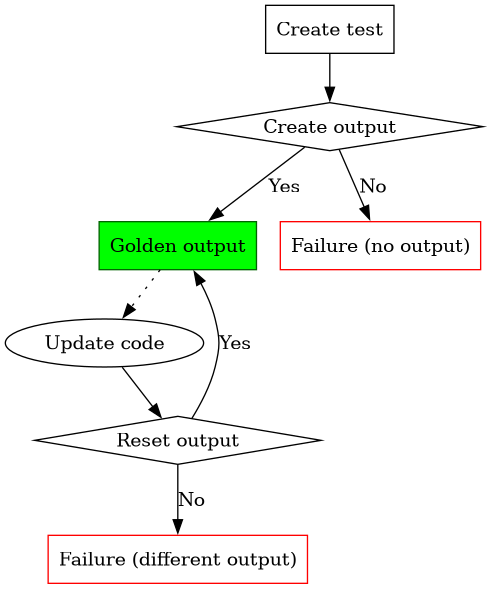

A golden test behaves as follows:

1. Produce the current output.
2. If the golden output does not exist:
   - Fail the test; and
   - Offer to create it.
3. If the golden output exists, compare the golden output with the current output.
4. If the current output differs from the golden output:
   - Fail the test; and
   - Offer to update the golden output.
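The behaviour described above can be sketched in plain Haskell. This is a minimal illustration, not how Sydtest or any other framework implements it: the `GoldenResult` type and the functions `compareGolden`, `checkGolden` and `acceptGolden` are hypothetical names, and the output is assumed to be a `String` stored in a single file.

```haskell
import System.Directory (doesFileExist)

-- | Outcome of one golden check (hypothetical type, for illustration only).
data GoldenResult
  = GoldenMissing -- no golden output yet: fail and offer to create it
  | GoldenMatches -- the current output equals the golden output
  | GoldenDiffers String -- fail and offer to update; carries the golden output
  deriving (Show, Eq)

-- | Pure comparison step. 'Nothing' means that the golden output does not exist.
compareGolden :: Maybe String -> String -> GoldenResult
compareGolden Nothing _ = GoldenMissing
compareGolden (Just golden) current
  | golden == current = GoldenMatches
  | otherwise = GoldenDiffers golden

-- | Read the golden file if it exists, then compare it with the current output.
checkGolden :: FilePath -> String -> IO GoldenResult
checkGolden path current = do
  exists <- doesFileExist path
  if exists
    then do
      golden <- readFile path
      -- Force the lazy read so that the file is closed before we return.
      length golden `seq` pure (compareGolden (Just golden) current)
    else pure (compareGolden Nothing current)

-- | What "create" and "update" amount to once the tester has signed off:
-- the current output simply becomes the new golden output.
acceptGolden :: FilePath -> String -> IO ()
acceptGolden = writeFile
```

Note that only `GoldenMatches` counts as a pass in this sketch. Both other outcomes fail the test, and the golden file is only written when the tester explicitly asks for it.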

Golden tests provide several features:

- The output cannot change by accident. Either the test fails, so that CI can fail, or the changes are reflected in the commit diff.
- The tester does not have to produce the first golden output themselves; they can have the golden test create it for them.
- The tester does not have to produce subsequent golden updates themselves; they can have the golden test create them.
- The output does not have to be parseable. The test only compares two versions of the output, so the output never needs to be parsed to execute the test.

(It is important that the golden test fails if the golden output is missing, because then the test can fail if the golden output is not included in the test execution context by accident.)

Golden tests with Sydtest

Golden tests often get special treatment in testing frameworks. For example, in Haskell there are libraries for golden tests with tasty or with hspec. In sydtest, golden tests are built-in, so they won't require any additional libraries.

To write a golden test with Sydtest, you can have a look at the Test.Syd.Def.Golden module.

For example, you could write a golden test for the version of your data model:

    import Test.Syd

    dataModelVersion :: String
    dataModelVersion = "v0.1"

    spec :: Spec
    spec = do
      describe "dataModelVersion" $
        it "hasn't unexpectedly changed" $
          pureGoldenStringFile "test_resources/data-model.txt" dataModelVersion

The first time you run this test, you get a failure:

    dataModelVersion
      ✗ hasn't unexpectedly changed 0.18 ms
        Golden output not found

So you rerun the test with --golden-start and see:

    dataModelVersion
      ✓ hasn't unexpectedly changed 8.25 ms
        Golden output created

You'll see that test_resources/data-model.txt has been created and contains the string v0.1.

    $ cat test_resources/data-model.txt
    v0.1%

Now we "accidentally" update the dataModelVersion to v0.2 and get:

    file.hs:9
    ✗ 1 dataModelVersion.hasn't unexpectedly changed
        Expected these values to be equal:
        Actual: v0.2
        Expected: v0.1
        The golden results are in: test_resources/data-model.txt

But because we're certain this is intended, we pass --golden-reset and see:

    dataModelVersion
      ✓ hasn't unexpectedly changed 0.25 ms
        Golden output reset

Now we see in git diff that the golden output has changed:

    $ git diff -- test_resources/data-model.txt
    diff --git a/test_resources/data-model.txt b/test_resources/data-model.txt
    index 085135e..60fe1f2 100644
    --- a/test_resources/data-model.txt
    +++ b/test_resources/data-model.txt
    @@ -1 +1 @@
    -v0.1
    +v0.2

Conclusion

Write golden tests.

If you write any cool tests, feel free to tweet them at me! I'm always interested in learning more about testing techniques.