All CS SYD services (Intray, Tickler, Smos, Social Dance Today, ...) were down on 2023-08-08 between 12:30 and 18:00 Zürich time. This is a postmortem of the outage.

Full story

Symptoms

Around 12:30, I get Intray notifications saying that my services are unreachable. I browse to https://cs-syd.eu and don't get a response. I try to ssh into the machine, and the command hangs.

Because I had a medical appointment at 13:00, I decided I would try to reboot the EC2 instance that hosts these services, and investigate when I got back.

After the services were rebooted, all services responded with 502: Bad Gateway.

Investigation

Once I got back from the medical appointment, and still saw the 502 responses, I tried ssh-ing into the machine again. This time I was able to get in, and run systemctl status.

The status showed degraded, as expected. I noticed that nginx was still running, which explained the 502 responses. I also noticed that none of my services were running.

Indeed the intray-production-web-server service, for example, was restart-looping. I saw an error in the journalctl logs that said cannot execute binary file: Exec format error.

This is an error that occurs when you try to run a 64-bit executable on a 32-bit platform. I'd never seen this error on this machine before, and machines don't magically change their architecture, so I figured "that can't be it".

Next up, I ran file on the executable, and saw (newlines added for clarity):

nix/store/0pb973llpgg42159g5x48bsrz8hw0gvw-intray-web-server-0.0.0.0/bin/intray-web-server: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/ld-linux-x86-64.so.2, for GNU/Linux 3.10.0, stripped

This was definitely a 64-bit executable. I also tried to ldd it, and saw:

$ ldd /nix/store/0pb973llpgg42159g5x48bsrz8hw0gvw-intray-web-server-0.0.0.0/bin/intray-web-server not a dynamic executable

This was extremely weird because I knew that this wasn't a statically linked executable. I double-checked it by copying that executable to my local machine, and indeed on my machine I saw:

~/debugging $ ldd intray-web-server linux-vdso.so.1 (0x00007ffe083e1000) libsqlite3.so.0 => /nix/store/64pyqgnjar1cdqg2l5kim7bngqpggx63-sqlite-3.41.2/lib/libsqlite3.so.0 (0x00007f391199b000) libpthread.so.0 => /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/libpthread.so.0 (0x00007f3911996000) libz.so.1 => /nix/store/hr3m53r0nhyqx80sg0bz9xjgk6jg009k-zlib-1.2.13/lib/libz.so.1 (0x00007f3911977000) libgmp.so.10 => /nix/store/0ssnwyy41aynhav7jr4dz1y01lfzi86f-gmp-with-cxx-6.2.1/lib/libgmp.so.10 (0x00007f39118d7000) libc.so.6 => /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/libc.so.6 (0x00007f39116ef000) libm.so.6 => /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/libm.so.6 (0x00007f391160f000) librt.so.1 => /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/librt.so.1 (0x00007f391160a000) libdl.so.2 => /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/libdl.so.2 (0x00007f3911605000) libffi.so.8 => /nix/store/mnq0hqsqivdbaqzmzc287l0z9zw8dp15-libffi-3.4.4/lib/libffi.so.8 (0x00007f39115f8000) /nix/store/yaz7pyf0ah88g2v505l38n0f3wg2vzdj-glibc-2.37-8/lib/ld-linux-x86-64.so.2 => /nix/store/46m4xx889wlhsdj72j38fnlyyvvvvbyb-glibc-2.37-8/lib64/ld-linux-x86-64.so.2 (0x00007f3911aec000)

I looked at journalctl as well, and saw this:

Failed to create cgroup /system.slice/intray-web-server-production.service: Cannot allocate memory

However btop (which worked) showed that I was only using 25% of the machine's available RAM.

I also run df -h and the disk was not full either:

$ df -h Filesystem Size Used Avail Use% Mounted on devtmpfs 100M 0 100M 0% /dev tmpfs 991M 0 991M 0% /dev/shm tmpfs 496M 3.3M 492M 1% /run tmpfs 991M 408K 990M 1% /run/wrappers /dev/disk/by-label/nixos 32G 14G 17G 45% / tmpfs 199M 0 199M 0% /run/user/0

At this point I thought that maybe my disk had been corrupted and the nix store might have been missing files or parts of files. However, the intray-web-server file I got from the server matched the intray-web-server file that I got from my own nix store.

I also tried to nix copy all the relevant store paths to the machine again. I saw the disk usage increasing, so I thought that might have been the solution, but in the end it did not change anything.

Architecture rabbit hole

I decided to investigate the "wrong architecture" idea again.

I first tried nix-info and was surprised to see:

system: 'i686-linux'

This seriously surprised me because my flake.nix said system = "x86_64-linux" and that had worked before. I tried figuring out where nix-info got this information but could not figure it out easily. Using strace did not show me any files that it'd be reading this information from. (In hindsight I now know that programs can ask this information from the kernel directly, but I hadn't thought to look for that in the strace output.)

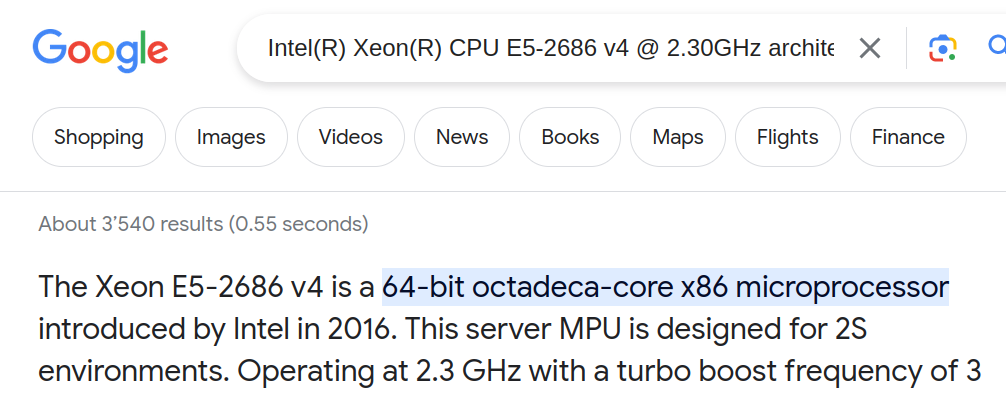

I was still convinced that this information was wrong, so I tried looking up the processor info in /proc/cpuinfo:

processor : 0 vendor_id : GenuineIntel cpu family : 6 model : 79 model name : Intel(R) Xeon(R) CPU E5-2686 v4 @ 2.30GHz

I looked up this chip and saw that it was indeed a 64-bit processor:

How could /proc/cpuinfo and nix-info disagree?!

It turns out that you can run a 32-bit kernel on a 64-bit processor. But that would mean that I was running a 32-bit kernel and I'd configured system to be x86-64_linux?!

I looked into /run/booted-system/system and, to my great surprise, found:

i686-linux

I somehow managed to deploy a 32-bit system to a 64-bit machine, without noticing, and only find out an unknown amount of time later.

I double-checked that I was building a 64-bit system in my flake:

$ nix build .#nixosConfigurations.default.config.system.build.toplevel $ cat result/system x86_64-linux

At this point I was considering that maybe the kernel had magically changed size. (I thought I was going a bit crazy, to be honest.) But, as my favourite engineer likes to remind me:

There is no magic, you can always go figure it out.

So I continued to investigate.

Deploying with NixOps

I tried to deploy my machine again, but this time without any of the services:

nixops deploy

Deployment succeeded, so I celebrated! Then I rebooted the instance and checked /run/current-system/system and saw:

i686-linux

How could this be? I had just built an x86_64-linux system, but by the time I'd deployed it, it had turned into an i686-linux system.

I hypothesised that maybe the automation that changes the boot sequence was built for a 64-bit system and would therefore fail to change the boot sequence when run on a 32-bit system.

To rule this out, I tried manually adding an option to /boot/grub/grub.cfg based on the system that I had nix copy-ed over to the machine manually. When I rebooted now, the machine booted into a 64-bit system, yay! Indeed, nix-info showed x86_64-linux.

Except after I redeployed again with nixops deploy, nix-info showed i686-linux again. This meant I could exclude the "boot sequence automation" hypothesis.

It also meant that I had my next lead: nixops. I tried running nixops deploy with --build-only to see what it built, and to my great surprise it built something different than what was in my flake.

Indeed nixops deploy deployed a 32-bit system eventhough I had clearly specified system = "x86_64-linux". I tried specifying system in different places; The pgks that NixOps came from, the deployement, and the machine specification itself. None of these options had any impact on whether NixOps would build a 32-bit system.

At this point I spent a bit more time trying to get nixops to build a 64-bit system, but in the end I realised that I could "just" use nixos-rebuild to fix the issue:

$ nixos-rebuild switch --flake .#default --target-host root@instance --build-host localhost

Hindsight

In hindsight I realised that the original "wrong architecture" error made a lot of sense because I was running a 32-bit kernel.

It also made sense /proc/cpuinfo and nix-info disagreed because I was running the wrong kernel for the system.

It made a lot of sense that the instance did not switch into the system that I wasn't deploying.

I theorise that, with the switch from NixOps 1 to NixOps 2, I'd been deploying a 32-bit kernel but still running a 64-bit kernel because I'd never rebooted the machine. This would explain why the problem never showed up until I rebooted the instance.

Remaining unknowns

I still don't understand why ldd showed the binary to be a static executable. Maybe because a 32-bit ldd can't investigate 64-bit executables but just silently shows an incorrect result?

I still don't know why nixops could only build a 32-bit executable. For the record, this is nixopsUnstable (NixOps 2), so I guess I should have expected things to break. I just hadn't expected that things would break months later and behind my back.

Review

What went smoothly

My monitoring noticed the problem within minutes, so I am very happy about how quickly that went.

Deploying with

nixos-rebuild switch --target-hostwent extremely smoothly. The command built the right machine, deployed it to the right place, put the right grub configuration in place, and all services came up again.

What could have gone more smoothly

Investigate what the error tells you first, not last.

The error was pretty clearly telling me that there was an architecture issue, but I dismissed it as "that's probably not it". Instead I could have checked excluded that possibility first and I'd have found the problem sooner.

Fix the issue first, especially if it's faster, then fix the cause.

Once I found out the problem was NixOps building the wrong system, I could have immediately deployed using an alternative method like

nixos-rebuildinstead of trying to fix my NixOps configuration. This would have saved about an hour of downtime.

Next steps

Closely monitor why the machine locked up. The original problem is probably still there and I still have no idea what it was.

Switch away from

nixops. I will probably deploy infrastructure withterraformand software withnixos-rebuildor something similarly simple. I used NixOps because it can do infrastructure and software deployments together, but given that I not only have static infrastructure, but specifically only 1 server, it makes no sense to take on this extra complexity.Consider more frequently rebooting the machine to find this kind of problem earlier.

Timeline

12:30: Alarms go off saying that cs-syd.eu is unreachable.

12:45: Instance reboot.

13:00: I have a medical appointment.

14:30: I get back from my medical appointment and start investigating.

18:00: I deploy with an alternative deployment tool; all services are up again.

18:30: Informed a NixOps maintainer.

20:35: A NixOps maintainer submits a PR with a workaround

Acknowledgments

I owe big thanks to nh2, who spent at least two hours in a call with me to help me debug it. The outage would have taken many hours longer to fix without him.