This post announces the new mergeful library. In this third part, we describe how mergeful can help a server and its clients agree on a collection of values. This synchronisation mechanism will be the basis of a sync server for Smos.

Why

The original use-case for this library came from a sync server for Smos. In particular, such a sync server would need to be able to synchronise (smos) files and somehow resolve conflicts. I wanted to compartmentalise the complexity as much as possible, and keep the library generic in the values that would be synced. The smos sync server would then use (smos) files as the values in the collection.

How

Pieces of the puzzle

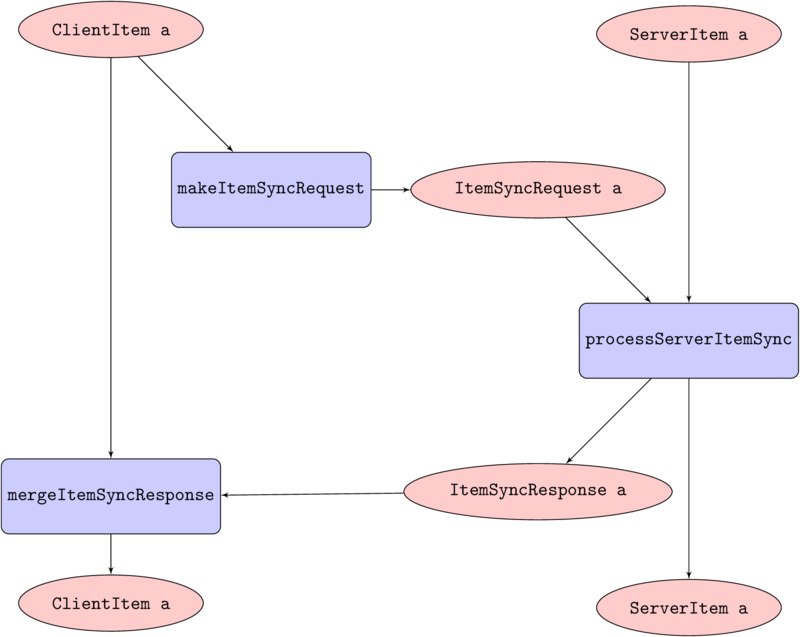

The workflow for syncing an item is very similar to the workflow for syncing a value. The only difference is that there is no separate initial request necessary. For reference, here is the entire flow again:

A central server and one or more clients want to cooperatively agree on zero or one value of type a. Clients store a ClientStore a value and the server stores a ServerStore a value. Clients regularly produce an SyncRequest a value from their ClientStore using a makeSyncRequest :: ClientStore a -> SyncRequest a function.

When the server receives an SyncRequest a value, it uses its ServerStore a and an processServerSync :: ServerStore a -> SyncRequest a -> (SyncResponse a, ServerStore a) function to produce an SyncResponse a and a new ServerStore a It then stores the new ServerStore a and sends the SyncResponse a back to the client.

When the client receives the SyncResponse a, it uses a mergeSyncResponse :: ClientStore a -> SyncResponse a -> ClientStore a function to update its ClientStore a to reflect the new synchronised state.

The following diagram should help:

More about the particulars of these types and functions later.

Rejected ideas

Agreeing on a collection sounds like a special case of agreeing on a value where the value is a collection. It is important to realise that in the typical case of syncing a collection, there will be few conflicts on the same value. However, if we regard the collection as a single value, even modifications that aren't strictly conflicting would be recorded as a conflict. Such a conflict would require the server to send over the entire collection again. Instead, we will add special support for synchronising collections, because it is a common case.

The mergeful solution

We will implement the syncing of collections on top of the syncing of items. In order to do that, without constantly creating duplicates, we must somehow give each item a name. Indeed, in order to compare a ClientItem with a ServerItem, we need to know which two in the collection to compare. The sollution that we've chosen is to use unique identifiers generated by the server. It is the server's responsibility to ensure that these identifiers are and remain unique. The server could, for example, use incremented identifiers (like in SQL) or randomly generated UUIDs.

Next, to define the ClientStore type, we will turn the ClientItem sum type into a product type:

data ClientStore i a =

ClientStore

{ clientStoreAddedItems :: Map ClientId a

-- ^ These items are new locally but have not been synced to the server yet.

, clientStoreSyncedItems :: Map i (Timed a)

-- ^ These items have been synced at their respective 'ServerTime's.

, clientStoreSyncedButChangedItems :: Map i (Timed a)

-- ^ These items have been synced at their respective 'ServerTime's

-- but modified locally since then.

, clientStoreDeletedItems :: Map i ServerTime

-- ^ These items have been deleted locally after they were synced

-- but the server has not been notified of that yet.

}Note that the added items do not have identifiers yet, because the server has not generated any for them. Instead the client needs to maintain a client-side identifier for it. This is just a simple newtype around a Word64. The rest of the items each already have a server identifer, and the validity constraint will ensure that there are no duplicates:

instance (Validity i, Ord i, Validity a) => Validity (ClientStore i a) where

validate cs@ClientStore {..} =

mconcat

[ genericValidate cs

, declare "There are no duplicate IDs" $

distinct $

concat $

[ M.keys clientStoreSyncedItems

, M.keys clientStoreSyncedButChangedItems

, M.keys clientStoreDeletedItems

]

]The 'SyncRequest' type looks similar again, again without the actual value for non-modified items:

data SyncRequest i a =

SyncRequest

{ syncRequestNewItems :: Map ClientId a

-- ^ These items are new locally but have not been synced to the server yet.

, syncRequestKnownItems :: Map i ServerTime

-- ^ These items have been synced at their respective 'ServerTime's.

, syncRequestKnownButChangedItems :: Map i (Timed a)

-- ^ These items have been synced at their respective 'ServerTime's

-- but modified locally since then.

, syncRequestDeletedItems :: Map i ServerTime

-- ^ These items have been deleted locally after they were synced

-- but the server has not been notified of that yet.

}The ServerStore can now actually look very simple. Just a collection of items, each with their unique identifier:

newtype ServerStore i a = ServerStore { serverStoreItems :: Map i (Timed a) }

The SyncResponse is actually very interesting, but it has been made easy by the work on syncing items. Indeed, we just need a field for every constructor in the ItemSyncResponse type:

data SyncResponse i a =

SyncResponse

{ syncResponseClientAdded :: Map ClientId (i, ServerTime)

-- ^ The client added these items and server has succesfully been made aware of that.

--

-- The client needs to update their server times

, syncResponseClientChanged :: Map i ServerTime

-- ^ The client changed these items and server has succesfully been made aware of that.

--

-- The client needs to update their server times

, syncResponseClientDeleted :: Set i

-- ^ The client deleted these items and server has succesfully been made aware of that.

--

-- The client can delete them from its deleted items

, syncResponseServerAdded :: Map i (Timed a)

-- ^ These items have been added on the server side

--

-- The client should add them too.

, syncResponseServerChanged :: Map i (Timed a)

-- ^ These items have been modified on the server side.

--

-- The client should modify them too.

, syncResponseServerDeleted :: Set i

-- ^ These items were deleted on the server side

--

-- The client should delete them too

, syncResponseConflicts :: Map i (Timed a)

-- ^ These are conflicts where the server and the client both have an item, but it is different.

--

-- The server kept its part of each, the client can either take whatever the server gave them

-- or deal with the conflicts somehow, and then try to re-sync.

, syncResponseConflictsClientDeleted :: Map i (Timed a)

-- ^ These are conflicts where the server has an item but the client does not.

--

-- The server kept its item, the client can either take whatever the server gave them

-- or deal with the conflicts somehow, and then try to re-sync.

, syncResponseConflictsServerDeleted :: Set i

-- ^ These are conflicts where the server has no item but the client has a modified item.

--

-- The server left its item deleted, the client can either delete its items too

-- or deal with the conflicts somehow, and then try to re-sync.

}Actual synchronization

The complex work of the actual synchronisation has already been done in the item synchronisation. The complexity of collection synchronisation itself comes from matching up client items and server items up in pains on the server side, and from merging the SyncResponse back into the ClientStore afterwards.

Creating the SyncRequest is easy. We just need to not send over the values that have not been modified:

makeSyncRequest :: ClientStore i a -> SyncRequest i a

makeSyncRequest ClientStore {..} =

SyncRequest

{ syncRequestNewItems = clientStoreAddedItems

, syncRequestKnownItems = M.map timedTime clientStoreSyncedItems

, syncRequestKnownButChangedItems = clientStoreSyncedButChangedItems

, syncRequestDeletedItems = clientStoreDeletedItems

}Processing a 'SyncRequest' on the server-side is tricky. This is a sketch of the solution with some helper functions are left out. These helper functions are left as an exercise to the reader, and the solutions can be found here.

processServerSync ::

forall i a m. (Ord i, Monad m)

=> m i -- ^ The action that is guaranteed to generate unique identifiers

-> ServerStore i a

-> SyncRequest i a

-> m (SyncResponse i a, ServerStore i a)

processServerSync genId ServerStore {..} sr@SyncRequest {..}

-- Make tuples of requests for all of the items that only had a client identifier.

= do

let unidentifedPairs :: Map ClientId (ServerItem a, ItemSyncRequest a)

unidentifedPairs = M.map (\a -> (ServerEmpty, ItemSyncRequestNew a)) syncRequestNewItems

-- Make tuples of results for each of the unidentifier tuples.

unidentifedResults :: Map ClientId (ItemSyncResponse a, ServerItem a)

unidentifedResults = M.map (uncurry processServerItemSync) unidentifedPairs

generatedResults <- generateIdentifiersFor genId unidentifedResults

-- Gather the items that had a server identifier already.

let clientIdentifiedSyncRequests :: Map i (ItemSyncRequest a)

clientIdentifiedSyncRequests = identifiedItemSyncRequests sr

-- Make 'ServerItem's for each of the items on the server side

serverIdentifiedItems :: Map i (ServerItem a)

serverIdentifiedItems = M.map ServerFull serverStoreItems

-- Match up client items with server items by their id.

thesePairs :: Map i (These (ServerItem a) (ItemSyncRequest a))

thesePairs = unionTheseMaps serverIdentifiedItems clientIdentifiedSyncRequests

-- Make tuples of server 'ServerItem's and 'ItemSyncRequest's for each of the items with an id

requestPairs :: Map i (ServerItem a, ItemSyncRequest a)

requestPairs = M.map (fromThese ServerEmpty ItemSyncRequestPoll) thesePairs

-- Make tuples of results for each of the tuplus that had a server identifier.

identifiedResults :: Map i (ItemSyncResponse a, ServerItem a)

identifiedResults = M.map (uncurry processServerItemSync) requestPairs

-- Put together the results together

let allResults :: Map (Identifier i) (ItemSyncResponse a, ServerItem a)

allResults =

M.union

(M.mapKeys OnlyServer identifiedResults)

(M.mapKeys (uncurry BothServerAndClient) generatedResults)

pure $ produceSyncResults allResultsMerging the SyncResponse back into the ClientStore is similarly tricky, and is left as an exercise to the reader again. The solution can be found here.

Testing the implementation

There are a few interesting aspects to the tests. In particular, apart from functionality regarding conlficts, all client-side merge functions should act in the same way. This means that we can make a test suite combinator for them:

mergeFunctionSpec ::

forall a. (Show a, Ord a, GenValid a)

=> (forall i. Ord i =>

ClientStore i a -> SyncResponse i a -> ClientStore i a)

-> SpecHere we are using {-# LANGUAGE RankNTypes #-} to describe that the caller can decide on the parameter a (the values to synchronise), but the callee can decide on the parameter i (the identifiers for the values). The callee (the test suite combinator), wants to both use pseudorandomly generated UUIDs and also specify that the type of the identifiers should not matter to the merge function.

To generate the UUIDs, the test suite uses a little state transformer monad:

newtype D m a =

D

{ unD :: StateT StdGen m a

}

deriving (Generic, Functor, Applicative, Monad, MonadState StdGen, MonadTrans, MonadIO)

evalD :: D Identity a -> a

evalD = runIdentity . evalDM

runD :: D Identity a -> StdGen -> (a, StdGen)

runD = runState . unD

evalDM :: Functor m => D m a -> m a

evalDM d = fst <$> runDM d (mkStdGen 42)

runDM :: D m a -> StdGen -> m (a, StdGen)

runDM = runStateT . unD

genD :: Monad m => D m UUID

genD = do

r <- get

let (u, r') = random r

put r'

pure uNote that, for the sake of test reproducibility, it is important that the seed of the random generator is in fact fixed. Here we chose 42.

The mergeFunctionSpec test suite combinator is called with three different merge functions:

mergeSyncResponseFromServermergeSyncResponseIgnoreProblemsA custom merge function that uses the GCounter CRDT to resolve merge conflicts:

describe "Syncing with mergeSyncResponseUsingStrategy with a GCounter" $

mergeFunctionSpec @Int $

mergeSyncResponseUsingStrategy

ItemMergeStrategy

{ itemMergeStrategyMergeChangeConflict =

\clientItem (Timed serverItem t) -> Timed (max clientItem serverItem) t

, itemMergeStrategyMergeClientDeletedConflict = \serverItem -> Just serverItem

, itemMergeStrategyMergeServerDeletedConflict = \_ -> Nothing

}We will not discuss every test here, but you can be sure that it is quite comprehensive. Here is a small overview

Data.Mergeful.Collection

initialServerStore

is valid

initialSyncRequest

is valid

emptySyncResponse

is valid

makeSyncRequest

produces valid requests

mergeAddedItems

produces valid results

mergeSyncedButChangedItems

produces valid results

mergeAddedItems

produces valid results

mergeSyncedButChangedItems

produces valid results

mergeDeletedItems

produces valid results

mergeSyncResponseIgnoreProblems

produces valid requests

processServerSync

produces valid tuples of a response and a store

Syncing with mergeSyncResponseIgnoreProblems

Single client

Multi-item

succesfully downloads everything from the server for an empty client

succesfully uploads everything to the server for an empty server

is idempotent with one client

Multiple clients

Single-item

successfully syncs an addition accross to a second client

successfully syncs a modification accross to a second client

succesfully syncs a deletion across to a second client

does not run into a conflict if two clients both try to sync a deletion

Multiple items

successfully syncs additions accross to a second client

succesfully syncs deletions across to a second client

does not run into a conflict if two clients both try to sync a deletion

does not lose data after a conflict occurs

Syncing with mergeSyncResponseFromServer

Single client

Multi-item

succesfully downloads everything from the server for an empty client

succesfully uploads everything to the server for an empty server

is idempotent with one client

Multiple clients

Single-item

successfully syncs an addition accross to a second client

successfully syncs a modification accross to a second client

succesfully syncs a deletion across to a second client

does not run into a conflict if two clients both try to sync a deletion

Multiple items

successfully syncs additions accross to a second client

succesfully syncs deletions across to a second client

does not run into a conflict if two clients both try to sync a deletion

mergeSyncResponseFromServer

only differs from mergeSyncResponseIgnoreProblems on conflicts

does not diverge after a conflict occurs

Syncing with mergeSyncResponseUsingStrategy with a GCounter

Single client

Multi-item

succesfully downloads everything from the server for an empty client

succesfully uploads everything to the server for an empty server

is idempotent with one client

Multiple clients

Single-item

successfully syncs an addition accross to a second client

successfully syncs a modification accross to a second client

succesfully syncs a deletion across to a second client

does not run into a conflict if two clients both try to sync a deletion

Multiple items

successfully syncs additions accross to a second client

succesfully syncs deletions across to a second client

does not run into a conflict if two clients both try to sync a deletion

References

The mergeful library is available on Hackage. Mergeful originated in the work on Smos, Intray and Tickler. This post is part of an effort to encourage contributions to Smos. The simplest contribution could be to just try out smos and provide feedback on the experience. Smos is a purely functional semantic forest editor of a subset of YAML that is intended to replace Emacs' Org-mode for Getting Things Done.