This post motivates and introduces the Super User Spark: the Spark language and the spark tool. Assuming no previous knowledge, it demonstrates how a beginner could use the Super User Spark.

The Super User Spark is a tool that lets you manage your beautiful and precious system configuration across multiple systems and failures. The Spark language is a domain-specific language in which you describe how you want to manage your dotfiles. spark is the command-line utility that lets you parse, format, compile, check and deploy your Spark files.

Installing spark is as easy as running cabal install super-user-spark.

Demo

Imagine you are configuring a completely new system. The first thing you do on your new system is create some aliases in a new file: ~/.bash_aliases.

alias please='sudo'

The aliases in this file need to be sourced from ~/.bashrc, of course, so you add this line to your ~/.bashrc file:

source ~/.bash_aliases

You've now put some work into configuring your system. All of this work would be lost if you ever had to reinstall the system because you broke it. To keep your dotfiles safe, you store them in a dotfiles repository:

~ $ mkdir dotfiles
~ $ cd dotfiles
~/dotfiles $ git init
~/dotfiles $ cp ~/.bashrc ~/.bash_aliases .

This works, but there is a problem. If you change any of these dotfiles, you have to copy them into the dotfiles repository again. Even worse, when you bring the dotfiles to a new system, you have to copy all the dotfiles out of your dotfiles directory to the right places. You decide to make symbolic links instead.

~ $ ln -s /home/user/dotfiles/.bashrc /home/user/.bashrc

Again, this works, but there is a problem: making all these links is tedious. You could write a bash script, but that gets tedious as well. There are many edge cases to consider (for example, the destination may already exist in some form), and many of the paths share common prefixes.
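To make those edge cases concrete, here is a minimal sketch of what a single step of such a hand-written bash script might look like. The function name link_dotfile is hypothetical and not part of spark, and the sketch still ignores plenty of cases (relative paths, replacing existing files on request, and so on).

```bash
# Hypothetical helper, not part of spark: link one dotfile from the
# repository into place, refusing to clobber anything that already exists.
link_dotfile() {
  local src="$1" dst="$2"

  # The source must exist in the repository.
  if [ ! -e "$src" ]; then
    echo "missing source: $src" >&2
    return 1
  fi

  # Already linked to the right place: nothing to do.
  if [ -L "$dst" ] && [ "$(readlink "$dst")" = "$src" ]; then
    echo "already linked: $dst"
    return 0
  fi

  # Something else is in the way: a file, a directory or a different link.
  # -L also catches dangling links, which -e alone would miss.
  if [ -e "$dst" ] || [ -L "$dst" ]; then
    echo "destination exists: $dst" >&2
    return 1
  fi

  # Create parent directories if needed, then make the link.
  mkdir -p "$(dirname "$dst")"
  ln -s "$src" "$dst"
}
```

You would then call it once per dotfile, for example link_dotfile "$HOME/dotfiles/.bashrc" "$HOME/.bashrc", spelling out both full paths for every file.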

This is where spark comes in. You can write a Spark card to configure where you want these dotfiles to be deployed.

~/dotfiles $ cat bash.sus
card bash {
    into ~
    .bashrc -> .bashrc
    .bash_aliases -> .bash_aliases
}

You can probably guess what's happening here. The card is named bash, and all deployments go into the home directory. The file ~/dotfiles/.bashrc is linked to ~/.bashrc, and ~/dotfiles/.bash_aliases is linked to ~/.bash_aliases.

Running spark parse bash.sus will check the card for syntactic errors and spark format bash.sus will format the card nicely if you are too lazy to do that yourself. Running spark compile bash.sus will show you exactly how the dotfiles will be deployed:

~/dotfiles $ spark compile bash.sus
"~/dotfiles/.bashrc" l-> "~/.bashrc"
"~/dotfiles/.bash_aliases" l-> "~/.bash_aliases"
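The expansion spark compile performs for this card can be approximated in a few lines of bash. This is only an illustration, assuming that sources are resolved relative to the card's directory and destinations relative to the into directory, as the compile output suggests; expand_deployments and its source:destination argument format are made up for this example and are not spark's code.

```bash
# Hypothetical illustration, not spark's code: turn each "source:destination"
# pair into a line in the same notation as the spark compile output.
expand_deployments() {
  local card_dir="$1" into="$2"
  shift 2
  local pair src dst
  for pair in "$@"; do
    src="${pair%%:*}"   # part before the first colon
    dst="${pair#*:}"    # part after the first colon
    printf '"%s" l-> "%s"\n' "$card_dir/$src" "$into/$dst"
  done
}

# The tildes are quoted, so they are printed literally rather than expanded.
expand_deployments "~/dotfiles" "~" ".bashrc:.bashrc" ".bash_aliases:.bash_aliases"
# "~/dotfiles/.bashrc" l-> "~/.bashrc"
# "~/dotfiles/.bash_aliases" l-> "~/.bash_aliases"
```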

Running spark check bash.sus will show you information about the deployment of the card:

~/dotfiles $ spark check bash.sus
~/.bashrc: ready to deploy
~/.bash_aliases: ready to deploy

Finally, running spark deploy bash.sus performs all the linking you expect. spark deploy may tell you that some destination files already exist. In that case spark makes no assumptions and does nothing. You can run spark with the -r/--replace option to overwrite the existing files or links.
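The check-then-deploy behaviour described above can be modelled with a small bash sketch. It is a hypothetical illustration, not spark's implementation: check_deployment and deploy_one are made-up names, only the "ready to deploy" status appears in spark's output above, and the optional third argument merely stands in for the -r/--replace option.

```bash
# Hypothetical model, not spark's code. Only "ready to deploy" is a status
# spark is shown printing; the other two strings are invented for this sketch.
check_deployment() {
  local src="$1" dst="$2"
  if [ -L "$dst" ] && [ "$(readlink "$dst")" = "$src" ]; then
    echo "already deployed"
  elif [ -e "$dst" ] || [ -L "$dst" ]; then
    echo "destination exists"
  else
    echo "ready to deploy"
  fi
}

# deploy_one SRC DST [REPLACE]: link SRC to DST. An existing destination is
# left untouched unless REPLACE is 1 (standing in for -r/--replace).
deploy_one() {
  local src="$1" dst="$2" replace="${3:-0}"
  case "$(check_deployment "$src" "$dst")" in
    "already deployed") return 0 ;;
    "destination exists")
      if [ "$replace" != 1 ]; then
        echo "$dst: destination exists, doing nothing" >&2
        return 1
      fi
      rm -rf -- "$dst" ;;   # replace mode: remove whatever is in the way
  esac
  ln -s -- "$src" "$dst"
}
```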

Syntax

As you have probably noticed, writing .bashrc -> .bashrc is a bit tedious as well. spark has a shorthand syntax for this deployment:

card bash {
    into ~
    .bashrc
    .bash_aliases
}

Dotfiles are hidden when you run ls without the -a/--all option. You could store your dotfiles without the leading dot, for example as bashrc, and have them deployed to .bashrc as follows:

card bash {
    into ~
    bashrc -> .bashrc
    bash_aliases -> .bash_aliases
}

Surely you're then stuck with the long-hand syntax again? No, you are not! Because this is such a common use case, you can use the shorthand syntax anyway: spark first looks for the file with a dot and then for the file without one. Just make sure you don't also keep a file with the dot in its name, because that one would be found first.
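The lookup rule just described can be sketched as a tiny bash function. This is a hypothetical illustration of the rule as stated, not spark's implementation; resolve_source is a made-up name.

```bash
# Hypothetical sketch, not spark's code: for a shorthand deployment such as
# `.bashrc`, prefer the dotted file in the card's directory and fall back to
# the same name with the leading dot stripped.
resolve_source() {
  local card_dir="$1" name="$2"
  if [ -e "$card_dir/$name" ]; then
    echo "$card_dir/$name"
  elif [ -e "$card_dir/${name#.}" ]; then   # ${name#.} drops one leading dot
    echo "$card_dir/${name#.}"
  else
    echo "no source found for $name in $card_dir" >&2
    return 1
  fi
}
```

With only bashrc in the directory, resolve_source ~/dotfiles .bashrc picks bashrc; as soon as .bashrc also exists, the dotted file wins, which is why keeping both is a bad idea.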

The final card could look like this:

card bash {
    into ~
    .bashrc
    .bash_aliases
}

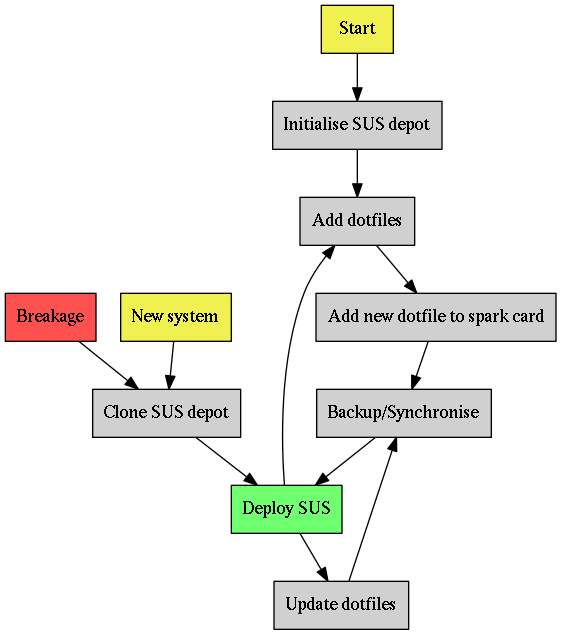

Workflow

You can now keep your beautifully configured system up to date and restore it after a breakage with one simple deployment. Your workflow concerning dotfiles could look like this:

If you found this interesting, have a look at parts 2 and 3 of the spark tutorial series.