Test pollution is a major barrier to efficient and approachable testing practices. Luckily, it is much easier to solve than we usually estimate.

What is test pollution

A test is considered polluting if the likelihood that it, or any other test, passes changes when the test is skipped, run in parallel with other tests, or run in a different order.

That's quite the mouthful, so let's look at more specific instances.

If test A fails iff it is run after test B is run, then test pollution has occurred.

If test A fails unless it is run after test B is run, then test pollution has also occurred.

If test A and test B both succeed when run one after the other, but not when run at the same time, then we speak of test pollution as well.
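As a minimal illustration of the first case, the sketch below models two tests as plain IO actions that share a mutable counter. The names testA, testB and runBothOrders are hypothetical and only use base; no test framework is involved.

```haskell
import Data.IORef (IORef, modifyIORef', newIORef, readIORef)

-- Hypothetical "test" B: mutates the shared state and always passes.
testB :: IORef Int -> IO Bool
testB counter = do
  modifyIORef' counter (+ 1)
  pure True

-- Hypothetical "test" A: passes only while the shared counter is untouched.
testA :: IORef Int -> IO Bool
testA counter = (== 0) <$> readIORef counter

-- Run both orders, each against fresh state.
-- Every pair holds (result of A, result of B).
runBothOrders :: IO ((Bool, Bool), (Bool, Bool))
runBothOrders = do
  c1 <- newIORef 0
  aFirst <- (,) <$> testA c1 <*> testB c1
  c2 <- newIORef 0
  bFirst <- do
    b <- testB c2
    a <- testA c2
    pure (a, b)
  pure (aFirst, bFirst)
```

Here A passes when it runs first and fails when it runs after B, so B pollutes A through the shared counter.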

Why avoid test pollution

A few puzzle pieces seem to "magically" fall into place when one can fix test pollution.

Without test pollution, any two non-polluting tests can be run in any order. This means that test suites can (and should) be run in a random order. This can help find test pollution as well as help with the following:

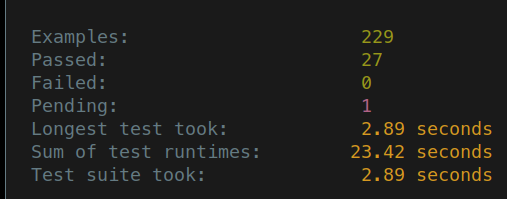

Without test pollution, any two non-polluting tests can be run at the same time. That means that tests can (and should) be run in parallel. This is great for finding thread safety issues, and it also has the massive benefit of speeding up test suite execution by a factor of up to the number of cores you use. Indeed, non-polluting tests are embarrassingly parallelisable.

It gets better: Because test runtimes are usually Pareto distributed, the total runtime of the test suite will now be closer to the maximum runtime of any test than to the sum of the runtimes of all the tests.

And it gets even better: because of the randomisation of execution order, tests are more likely to be nicely bin-packed into their parallelism.
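To make the runtime arithmetic concrete, here is a rough sketch that compares sequential and parallel execution time for a list of test runtimes. The makespan function is a hypothetical helper that hands each test to the currently least-loaded worker; it is only an assumption for illustration, not a description of how sydtest schedules tests.

```haskell
import Data.List (foldl', sort)

-- Total wall-clock time when the given runtimes are handed out, in order,
-- to the currently least-loaded of n workers (at least one worker is used).
makespan :: Int -> [Int] -> Int
makespan workers = maximum . foldl' assign (replicate (max 1 workers) 0)
  where
    -- Sorting puts the least-loaded worker first; give it the next test.
    assign loads runtime = case sort loads of
      (least : rest) -> (least + runtime) : rest
      [] -> []

-- One slow test and six fast ones (runtimes in seconds).
exampleRuntimes :: [Int]
exampleRuntimes = [10, 1, 1, 1, 1, 1, 1]
```

With these example runtimes, makespan 1 exampleRuntimes is 16 (the sum of all runtimes), while makespan 4 exampleRuntimes is 10 (the runtime of the slowest test).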

Typical examples of test pollution and how to fix them

The examples that follow here will be written using the sydtest testing framework in Haskell, but you can diagnose and fix the same problem in other languages and with other frameworks as well.

Shared file

Here are two tests that write to the same file. They might be testing that the writeFile function does not crash on these two different inputs.

    module SharedFileSpec (spec) where

    import Test.Syd

    spec :: Spec
    spec = do
      describe "Shared file" $ do
        it "A" $ do
          writeFile "hello" "world"
        it "B" $ do
          writeFile "hello" "cs-syd"

One can run each of these tests separately (with --match=A and --match=B) and they will pass. They can also be run in any order, but when they are run in parallel, the test suite becomes flaky:

    test/SharedFileSpec.hs:11
    ✗ 1 SharedFileSpec.Shared file.B
    hello: openFile: resource busy (file is locked)

A similar problem occurs when one test writes to the file and the other tries to read it:

    spec :: Spec
    spec = do
      describe "Shared file" $ do
        it "A" $ do
          writeFile "hello" "world"
        it "B" $ do
          readFile "hello" `shouldReturn` "world"

Test B will succeed if it is run after Test A, but not if the file hello does not exist yet, and it will be flaky if the two are run at the same time.

In both cases we speak of test pollution.

To solve this problem, we can use a temporary directory for the test to store its files in:

    module SharedFileSpec (spec) where

    import Path
    import Path.IO
    import Test.Syd

    spec :: Spec
    spec =
      describe "Shared file" $ do
        it "A" $
          withSystemTempDir "example" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "world"
        it "B" $
          withSystemTempDir "example" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "cs-syd"

By doing this, a new temporary directory is created before, and cleaned up after, each test. This way, the tests can be run in any order and at the same time without sharing a file. As a result, no more test pollution occurs.

This repeated setup and teardown infrastructure can be factored out using sydtest's concept of an inner resource:

    module SharedFileSpec (spec) where

    import Path
    import Path.IO
    import Test.Syd

    spec :: Spec
    spec =
      describe "Shared file" $ do
        around (withSystemTempDir "example") $ do
          it "A" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "world"
          it "B" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "cs-syd"

sydtest has a combinator for this already:

    module SharedFileSpec (spec) where

    import Path
    import Path.IO
    import Test.Syd
    import Test.Syd.Path

    spec :: Spec
    spec =
      describe "Shared file" $
        tempDirSpec "example" $ do
          it "A" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "world"
          it "B" $ \tempDir -> do
            filePath <- resolveFile tempDir "hello"
            writeFile (fromAbsFile filePath) "cs-syd"

The default port

When integration-testing a web server, we can run into problems with test pollution when the tests always use the default port (probably 8000 or 3000 or so).

In this example, two integration tests want to use the same port: 3000.

    {-# LANGUAGE NumericUnderscores #-}

    module SharedPortSpec (spec) where

    import Control.Concurrent (threadDelay)
    import Control.Concurrent.Async
    import Network.HTTP.Types as HTTP
    import Network.Wai as Wai
    import Network.Wai.Handler.Warp as Warp
    import Path
    import Path.IO
    import Test.Syd

    spec :: Spec
    spec = do
      describe "Shared port" $ do
        it "A" $ do
          withAsync (Warp.run 3000 dummyApplication) $ \a -> do
            link a
            threadDelay 1_000_000 -- Some request-response test
        it "B" $ do
          withAsync (Warp.run 3000 dummyApplication) $ \a -> do
            link a
            threadDelay 1_000_000 -- Some request-response test

    dummyApplication :: Wai.Application
    dummyApplication = \request sendResponse -> do
      let response = responseLBS HTTP.ok200 mempty mempty
      sendResponse response

When we run either of these tests separately, they pass. When we run them both at the same time, however, we get the following failure:

    test/SharedPortSpec.hs:21
    ✗ 1 SharedPortSpec.Shared port.B
    ExceptionInLinkedThread ThreadId 20 Network.Socket.bind: resource busy (Address already in use)

This is a problem with test pollution.

Instead, we can use the testWithApplication function to have Warp use a new free port for every test:

    spec :: Spec
    spec = do
      describe "Shared port" $ do
        it "A" $ do
          testWithApplication (pure dummyApplication) $ \port -> do
            threadDelay 1_000_000 -- Some request-response test
        it "B" $ do
          testWithApplication (pure dummyApplication) $ \port -> do
            threadDelay 1_000_000 -- Some request-response test

We can factor this out using an inner resource:

    spec :: Spec
    spec = do
      describe "Shared port" $
        around (testWithApplication (pure dummyApplication)) $ do
          it "A" $ \port -> do
            threadDelay 1_000_000 -- Some request-response test
          it "B" $ \port -> do
            threadDelay 1_000_000 -- Some request-response test

sydtest-wai already has a combinator for this:

    spec :: Spec
    spec = do
      describe "Shared port" $
        waiSpec dummyApplication $ do
          it "A" $ \port -> do
            threadDelay 1_000_000 -- Some request-response test
          it "B" $ \port -> do
            threadDelay 1_000_000 -- Some request-response test

Other examples

Other examples not mentioned here include:

Anything involving state that lives longer than the test

External APIs

Caches

Anything involving shared resources

The command-line process arguments

The process' current working directory

The process' environment variables

System ports
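For process-wide state such as environment variables, one possible approach is to save and restore the previous value around each test. The sketch below uses only base; withEnvVar is a hypothetical helper, not something provided by sydtest. Because the environment is shared by the whole process, this only removes order-dependent pollution: tests that use it still cannot safely run in parallel with each other.

```haskell
import Control.Exception (bracket)
import System.Environment (lookupEnv, setEnv, unsetEnv)

-- Hypothetical helper: run an action with an environment variable set,
-- then restore whatever value (or absence) was there before,
-- even if the action throws an exception.
withEnvVar :: String -> String -> IO a -> IO a
withEnvVar name value action =
  bracket
    (lookupEnv name <* setEnv name value) -- remember the old value, then set the new one
    (maybe (unsetEnv name) (setEnv name)) -- restore the old value or unset the variable
    (const action)
```

A test body can then be wrapped as withEnvVar "SOME_VARIABLE" "some-value" (...), where both the variable name and the value are placeholders.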

Conclusion

Avoid shared state or resources across tests. You can use your test framework to clean up before and after your tests, and to produce clean resources for them.

Use a sane testing framework that forces you to solve test pollution before it bites you, by:

running tests in parallel by default.

running tests in a random order by default.

If you cannot avoid test pollution (for example because you are writing an integration test that uses an external resource), don't use mocking but turn off parallelism and execution order randomisation locally instead.
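In sydtest, turning these features off locally could look roughly like the sketch below. It assumes the sequential and doNotRandomiseExecutionOrder combinators exported by Test.Syd; the test bodies are placeholders, and the exact names should be checked against the sydtest version in use.

```haskell
import Test.Syd

spec :: Spec
spec =
  describe "External resource" $
    -- Only this group gives up parallelism and random ordering;
    -- the rest of the test suite keeps both.
    sequential . doNotRandomiseExecutionOrder $ do
      it "A" $ do
        (pure () :: IO ()) -- Placeholder for a test that uses the external resource
      it "B" $ do
        (pure () :: IO ()) -- Placeholder for a test that relies on the effects of A
```

Keeping the scope of these combinators as small as possible means that the remaining tests continue to help detect pollution elsewhere.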